The importance of ethics in the use of AI is going to become as big as AI itself

If a week is a long time in politics, it’s light years in AI; yet the two timescales have intersected and continue to do so. One example is the scandal over Cambridge Analytica’s role in the 2016 US election that resulted in Donald Trump becoming president, and its influence on the Brexit vote. This week Sam Altman, CEO of OpenAI (creator of ChatGPT, which has entered mainstream consciousness at warp speed), called on US legislators to regulate AI, expressing particular concern for its potential to generate “interactive disinformation” in the run-up to the presidential elections next year.

Ethics and AI is a super-hot subject that will be with us from here on in, and an immense responsibility. The question is whose responsibility and who can be trusted? Even knowing where to start is a big, sticky question.

We turned to Reggie Townsend, who is VP, Data Ethics at SAS. In April 2022, he was appointed to the US National Artificial Intelligence Advisory Committee (NAIAC), which advises the president. He is also involved with equalai.org, which describes itself as “leading the movement for innovative, responsible and inclusive artificial intelligence”. Townsend is usually based in Chicago but he will be on tour at the Royal Institution of Great Britain in London on 7 June.

Start with principles…

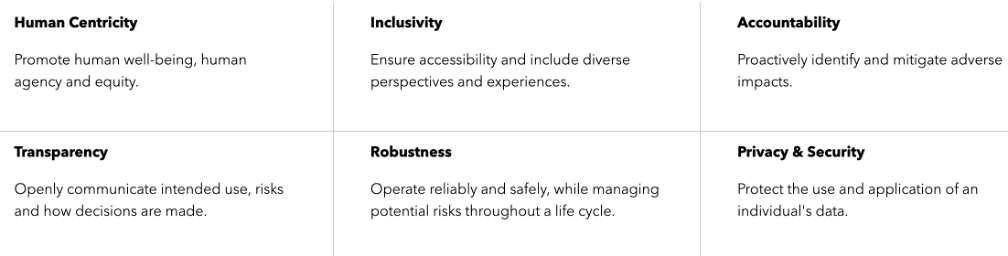

Townsend explains, “We started our Data Ethics Practice here at SAS [in May 2022] with the idea of ensuring that we have a consistent, coordinated approach to deploying software in a trustworthy manner. We’ve gone about establishing the principles necessary to do that [shown below].”

…then operationalise them using a model

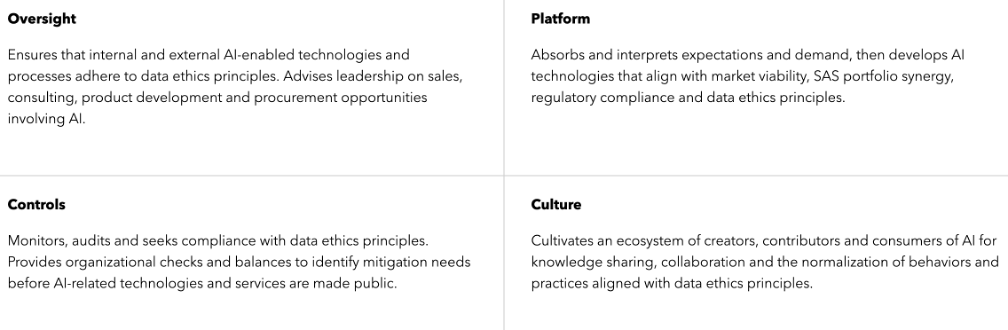

The next step is for SAS to operationalise those principles using a four-pillar model, called the quad, which maps back to them.

Townsend stresses, “The approach to trustworthy AI is more than a technology approach. It’s no coincidence three-fourths of our quad is focused on non-technology. The technology component, which is a platform, is where we interface directly with the product management and engineering teams, where we’re approaching trustworthy AI as a marketplace.”

Trustworthiness as a marketplace

SAS has identified six core requirements for that marketplace. Townsend says, “So you’ll see terms like mitigation, data management and model ops and so on. We have work in place with those teams, the product management and engineering teams, where we’re ensuring that our product is reflective of our principles. Many features that exist in our platform today are already reflective of those principles that we’ve packaged up.

“Then we’ve got a very robust roadmap that starts looking into the future, short and long term, as to what we want to bake into the platform. We recognise that the market’s requirements won’t be entirely satisfied by us; we will partner where we don’t provide capability, or concede some areas. The point is not to satisfy everything: the point is to recognise the marketplace and approach it like a business.”

“Controls”, Townsend says, “is where we do risk management and auditing, activity performance management work. I don’t know how familiar you are with some of the pending regulation as it relates to AI but we looked at all the major bodies around the world such as in the European Union and South America.

“We pulled out the essential elements and we’re harmonising them into a risk management approach…so our customers benefit from a platform that is informed by the AI compliance requirements. What we want to do is put them in a position to feel comfortable that if they build on SAS, they will be compliant with AI expectations, and they can ramp that up as those regulations solidify and grow.”

“The final pillar is culture…we’ve been around for 46 years…and built a pretty good brand on trust. So this isn’t new work for the people inside of the company. What is new is the way we are pulling it together in a package yet being really consistent and coordinated around the globe. This is really important, particularly as we think about AI regulation and the potential harms associated with non-compliance.”

Ethics in healthcare

Theory aside, how’s the practice going? Earlier this month, SAS launched what it calls a first-of-its-kind ethical AI healthcare lab in the Netherlands (pictured above) and expanded its Data Ethics Practice, integrating its Data for Good programme into the practice. “With the swift advancement of AI, we must remain diligent in keeping humans at the center of everything we do,” Townsend said in a press statement.

SAS collaborated with the Erasmus University Medical Centre (Erasmus MC), which is one of Europe’s leading academic hospitals, and Delft University of Technology (TU Delft), home of the TU Delft Digital Ethics Centre. The three organisations jointly launched the first Responsible and Ethical AI in Healthcare Lab (REAHL) with the aim of addressing the ethical concerns and challenges related to developing and implementing AI technologies in healthcare.

REAHL brings together multidisciplinary teams of experts in AI, medicine and ethics with policymakers to collaborate on developing and implementing AI technologies that prioritise patient safety, privacy and autonomy. This includes ensuring that AI systems are unbiased, transparent and accountable, and used in ways that respect patients’ rights and values. The REAHL intends to create a framework for ethical AI in healthcare that will serve as a model for medical centres and regions around the world.

Healthcare aside, the SAS Data for Good team will seek to partner with organisations where AI can be applied to improve society. It has collaborated with non-profit organisations to fight deforestation in Brazil and to explore discrimination in housing in New York City. It will work with the UNC Center for Galapagos Studies to apply crowd-driven AI and machine learning to help protect endangered sea turtles.