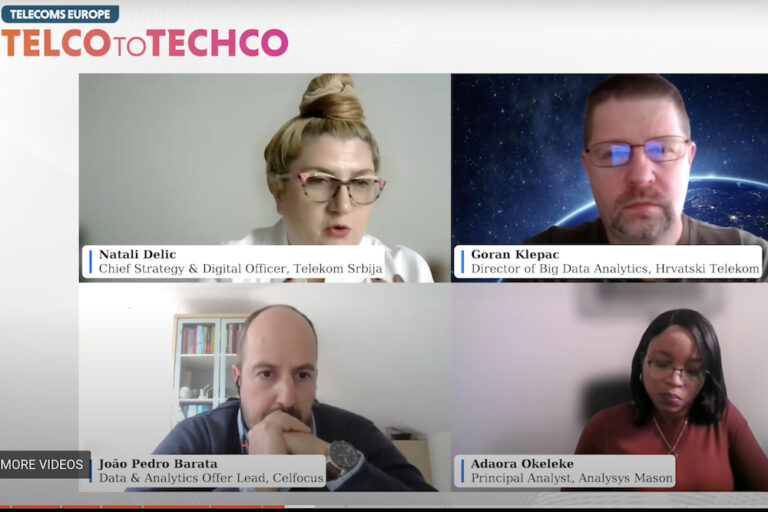

Telekom Srbija, Hrvatski Telekom and Celfocus share their experiences around moving data ops and analytics to the cloud in the telco industry

The discussion took place at our recent Telecom Europe Telco to Techco virtual event, moderated by Analysys Mason principal analyst Adaora Okeleke. Our panellists were Telekom Srbija chief strategy & digital officer Natali Delic, Hrvatski Telekom director of big data analytics Goran Klepac and Celfocus data & analytics offer lead João Barata.

WATCH THE FULL SESSION HERE

Moving towards self-service analytics in telcos

Telekom Srbija’s Delic acknowledged the prevalent hype surrounding Generative AI but emphasised the importance of focusing on fundamental aspects such as data management processes and organisational education. She stated: “There is a huge rise of demand for advanced analytics for quality, generative AI and for AI solutions, but we are still dealing more with fundamentals.”

The telco is prioritising a transition towards self-service analytics and making better use of its existing technology to create value. “We need to go more to self-service mode, instead of having small groups of people dealing with all the analytics requirements of the company,” she said. Additionally, Telekom Srbija is evaluating on-premises versus cloud solutions to determine which best aligns with its future objectives. Ultimately, the aim is to use AI to improve operational efficiency and overall performance.

Hrvatski Telekom’s Klepac pointed to the recurring pattern of technological hype and argued that organising and democratising data should take priority over adopting new technologies like AI. “What is the most important thing? You have to think about the data: how to organise the data, how to make data democratisation,” he said. He cautioned against relying solely on sophisticated AI tools like TensorFlow without a coherent strategy for data integration, warning that without such a strategy “you will get garbage in, garbage out.”

Choose your cloud battles

Klepac warned that processing in the cloud is not cheap, and that companies need to understand which kinds of processes are best suited to it. He gave the example of a big data environment where Oracle issues were affecting the ability to support some projects. The company he was consulting for moved the work to the cloud, and while the results improved, they still fell short of expectations. “You can use, for example, traditional algorithms like associative algorithms or CN2 or something like that, but even if you’re moving to cloud the results will still be the same,” he said.

In this case, the operator used a frequent pattern tree (FP-tree) approach to build a local solution and solved the issue quickly, using cloud services only for some pre-processing. “The fact is that you have to understand the processes and you have to deeply understand how algorithms are working [so] that you can use the best from both worlds,” he said.

Delic discussed Telekom Srbija’s current project evaluating the transition of workloads to a hyperscaler cloud provider, emphasising the need for a thorough business justification to avoid incurring costs without corresponding benefits. She said: “We created a plan from the beginning to see whether this will be justifiable from a business perspective.”

Two main factors are driving the telco’s interest in the cloud. The first is the flexibility and scalability the cloud offers compared with on-premises infrastructure, which is particularly important for rapid model iteration. She said: “It’s not always possible to have elasticity when you’re on-premises which you have when you’re in the cloud.” The second is the cloud’s potential to accelerate innovation by providing easier access to experimentation and proofs of concept, particularly for AI solutions.

She said: “We are hoping to have easier access and an easier way to do a lot of experimentation and proof of concepts with many of the available cloud solutions.” This shift aims to prioritise business agility over infrastructure concerns, anticipating a faster pace of innovation facilitated by cloud adoption.

Using the cloud to test an on-prem scenario

Celfocus’s Barata highlighted three key points about moving to the cloud: the significance of operational costs, including both cloud running costs and data platform maintenance expenses; the need for scalability to manage growing data volumes as organisations become data-driven; and the importance of business agility, as AI and Generative AI use cases prompt requests for new IT infrastructure and capabilities.

He noted that clients are also trying to reduce the cost of maintaining specific data platforms, for both infrastructure and maintenance purposes. However, he concluded that scalability and business agility are the primary drivers behind requests to move to cloud platforms: “But if I need to select just one problem, scalability or business agility is driving more these cloud requests to move to platform.”

Data privacy is top of mind

Telekom Srbija’s Delic addressed several crucial points regarding cloud adoption and data privacy. Firstly, she emphasised the challenge of securely connecting on-premises environments with hyperscalers, particularly in hybrid environments. She noted: “It’s not realistic that everything will be soon in the cloud, it will be combined.”

Secondly, she highlighted the local interest in data privacy and the need for meticulous planning and compliance with the law. “Most of the companies do have some processes around it,” she said. Delic underscored the importance of evaluating how data is processed and ensuring compliance with legal requirements when considering cloud migration. She concluded by stressing that, in the context of cloud adoption, data management and processing are more about processes than technology. “So it’s not so much about technology. It is a little bit if we need anonymisation, if we need data masking, because for some use cases, for some data, this might be required, but in general, it’s again more on the process of how we manage this data, how [it is made] available for analytics in general.”

The discussion also explored:

• GDPR compliance is crucial when moving to the cloud, with data protection and privacy as priorities

• Organisations must still minimise risks in cloud environments, even though those environments have strong built-in security

• Data preparation and cleaning are essential for data science and AI projects, with significant cost-saving implications

• Planning data migration to the cloud strategically can optimise costs by eliminating redundant or unnecessary data

• Smart processing methods can further reduce costs and enhance efficiency in cloud usage

• Effective planning and data quality preparation are key to cost-saving and efficient cloud utilisation

• The cloud serves as an ideal sandbox for testing methodologies and proof of concepts but requires conscious cost management

• Proper data quality preparation can significantly reduce processing costs and maximise cloud benefits

• The role of AI in data analytics

• Why telcos need to be wary about the hype surrounding AI

• On-prem versus cloud for data and analytics

• Using cloud for pre-processing

• Testing models and running tests in parallel

• Eliminating infrastructure concerns for faster innovation

• Advancing towards security objectives through innovation and process improvement